What is generative AI (GenAI) capable of?

Which new possibilities do Large Language Models (LLMs) like those used in ChatGPT, Microsoft Copilot and other applications open up? How is generative AI (GenAI) changing our (working) everyday life? Our colleagues will shed some light on this and other aspects in the following article. They discuss the opportunities offered by ChatGPT & Co within the corporate context, where there are (still) some limitations, and what possible solutions might look like.

What is ChatGPT?

ChatGPT is a chatbot developed in November 2022 by the US-based company OpenAI. This chatbot allows users to communicate with it via text-based messages, e.g., to have questions answered. The special thing about it is that the answers are generated with the help of Machine Learning (ML) and seem like the sort of natural responses a human might give.

GPT stands for “Generative Pretrained Transformer”. It is a manifestation of a Large Language Model that OpenAI developed back in 2018 and has been continuously refined further ever since. The different versions of ChatGPT are numbered, e.g., GPT-4, the version on which the fee-based version of ChatGPT is currently based.

GPT-3, GPT-4, GPT-n: What can the new versions do?

In March 2023 – less than six months since the release of ChatGPT based on GPT-3 – OpenAI had already introduced a new version of its series of language models, GPT4. There were two major innovations in particular:

- The first is that GPT-4 is the first multimodal model in the series. More specifically, this means that GPT-4 can process not only text but also images as input, for instance to answer questions about the content or even to match images and descriptions (potentially interesting in anti-fraud applications).

- Secondly, GPT-4 features a significantly larger context length of what is now up to 32,768 tokens (equivalent to about 25,000 words). Early Transformer-based models often had a context of 256 to 512 tokens; a length that is being quickly surpassed by many single ChatGPT responses today. The larger context offered by GPT-4 means that it can process significantly lengthier texts and, in a chat context, can still use answers from further back in time as well as automatically summarize them.

Even for a field that has advanced as rapidly as Artificial Intelligence in recent years, advancements in text-processing generative models at the moment feel fast-paced. It can therefore be assumed that further versions will follow in the near future, offering even more features – not least because further LLM solutions will intensify the competition.

What is GenAI?

There are now a large number of solutions based on different GPT versions that process image, sound and video in addition to Large Language Models. Whereas a few months ago ChatGPT was still used as a synonym for these solutions, the term generative AI or GenAI for short is now increasingly coming into use. This reflects the fact that many other players are entering the market alongside OpenAI, and that these include much more than just word processing.

Differentiating ChatGPT from other generative AI solutions

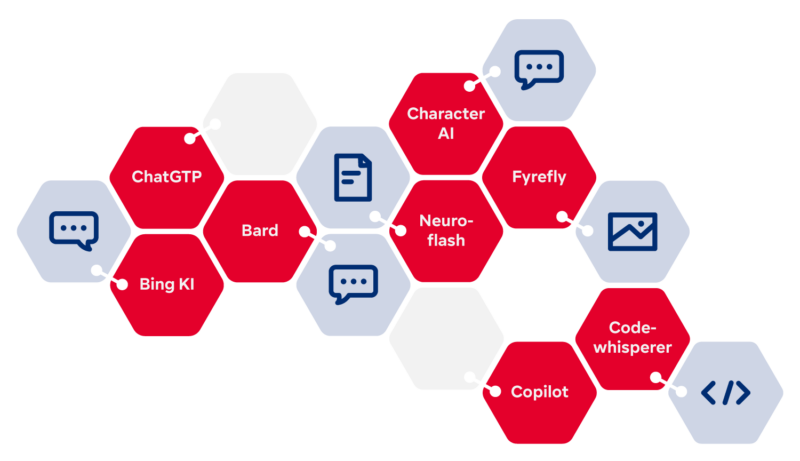

There are now numerous other chatbot products available besides ChatGPT, e.g., from providers such as Amazon, Google or DeepMind. What’s more, tools that generate images can now be found alongside the text-based products. Here is an overview of some of the currently most popular ones:

| Name | Provider | Functions |

| Bard | Text generation, Chatbot | |

| Bing KI | Microsoft | Chatbot integrated into Bing search |

| Character.ai | Noam Shazeer, Daniel De Freitas | Chatbot with any (also historical or fictional) characters |

| ChatGPT | OpenAI | Text generation, Chatbot |

| Codewhisperer | Amazon | Suggestions for the development and completion of code |

| Fyrefly | Adobe | Image generation & partial text generation |

| GitHub Copilot | GitHub, OpenAI | Suggestions for code development and code completion |

| Luminous | Aleph Alpha | Text generation |

| Microsoft Copilot | Microsoft | AI Assistant feature for Microsoft 365 applications such as Word, Excel, PowerPoint & Outlook |

| Midjourney | Midjourney | Image generation |

| Neuroflash | neuroflash | German language AI content tool that combines chatbot, text & image generator, SEO tool. |

* Since hundreds of new software solutions are currently being launched on the market every month, we do not claim this list to be exhaustive. If you have any questions about specific LLMs, please feel free to contact us.

Some of these products are only available in select countries, for select user groups only, or on a fee only basis. However, the functions and levels of availability are undergoing rapid change at present, as is the range of other comparable products. In addition, for many of these products there are now freely available open-source alternatives, some of which even run on end-user hardware such as cell phones or laptops.

Corporate LLM

Do you want a GenAI solution that you can safely deploy in your company while maintaining high performance? With Alan, the Comma LLM, which can be hosted in Germany, you get a privacy- and regulatory-compliant technology that allows you to use GenAI for your specific business purposes.

Advantages & limitations of ChatGPT in the corporate context

Text-based AI models have been capable of performing useful tasks in business processes for a number of years. We have applied it in a large number of customer projects, for example, to optimize input management or to improve fraud detection at insurance companies, to quickly detect trending topics in customer service, or to enable conversational AI. Besides the chatbot function – familiar to many from their day-to-day lives – ChatGPT has a number of other features such as text classification, information extraction, machine translation, automated text summarization, topic modeling, and more. Given the sheer amount of data ChatGPT has “seen” over the course of its training, and the size of the underlying AI model, ChatGPT can perform many of these tasks innately at an astonishingly high level. Notably, it does not need to be trained for this purpose on data that has been elaborately collected and prepared specifically for the task – as is the case with classical Machine Learning models.

Using this as a basis, companies could have ChatGPT perform numerous routine activities and – after human quality control – use the results in corporate processes. These could range from text suggestions for marketing and sales activities to business correspondence and documentation.

Where ChatGPT reaches its limits

So, the question remains as to why not all companies have been using ChatGPT to this end all along. The snag is that ChatGPT does not yet recognize company-specific texts and information. This gap cannot be closed (at this point in time) by retraining ChatGPT on these texts. The reason is that such an adaptation (transfer learning) would be more time-consuming for ChatGPT than for previous models, since ChatGPT was trained in parts with the RLHF method (reinforcement learning from human feedback). Here, in addition to pure text data, direct human feedback also flows into the development, the personnel input as well as the computing times of the servers would drive up the costs significantly. Up until recently, it was also disproportionately time-consuming. This has become somewhat easier now, so that in-house knowledge can now be fed into the system. Nevertheless, data protection aspects have to be taken into account (see ChatGPT vs. data protection & data security – what needs to be taken into account here?).

Dealing with the limitations of ChatGPT

There are ways to deal with these limitations. For one thing, it is of course still possible for companies to train completely individual AI models on their own data wherever it is available and easy to prepare. Alternatively, companies can have the best of both worlds by, for example, using an individually trained AI model to prepare their own data to fit a query to ChatGPT. This is useful, for example, wherever relevant passages from documents must first be identified before a specific question can be answered (see also Microsoft Tech Community). Furthermore, it is possible to automatically limit the information content of a request to ChatGPT, for example by anonymization or pseudonymization, in order to meet privacy and confidentiality concerns.

Generative AI vs. privacy & data security – what needs to be considered?

Anybody who is involved in the use of GenAI models such as ChatGPT inevitably also comes to the point of having to deal with the data protection and data security aspects these technologies entail. These aspects can be roughly divided into two areas:

- Privacy when an AI model is trained directly or indirectly.

- Data protection when using a “finished” model

Direct training of an AI model

For direct training (e.g., ChatGPT), the question is whether the service is legal with respect to the GDPR (or complies with applicable laws in the U.S. with similar objectives; some examples: Health Insurance Portability and Accountability Act [HIPAA], Children’s Online Privacy Protection Rule [COPPA], or California’s California Consumer Privacy Act [CCPA]). It is not clear how, for example, the “right to be forgotten” (Art. 17 GDPR) can be realized in an AI model. Once learned, information “stays” in the AI model. ChatGPT also does not offer the possibility of finding out whether data relating to a company or an individual is already stored and contained there; it is also not possible to have this data deleted (Art. 15 GDPR). Other critical aspects also include copyright issues: e.g., ChatGPT was partially trained on protected material which it can reproduce. OpenAI has not yet compensated anyone for the use of data. It is therefore unclear what the contextual integrity of information is, which can potentially be violated by an AI model. These aspects pertain first and foremost to OpenAI as the company behind ChatGPT.

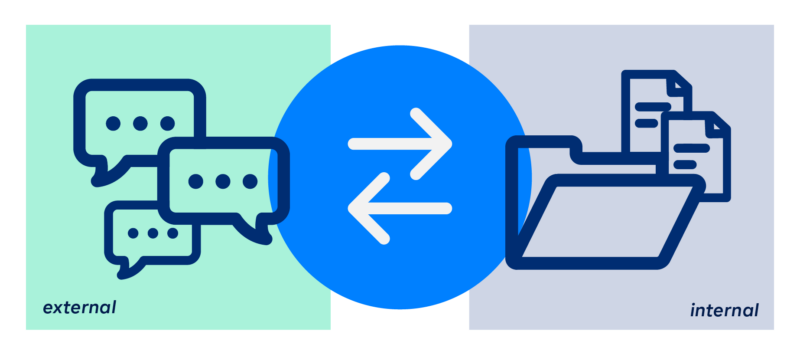

Indirectly training an AI model

Indirect training may occur as a result of the actual use of ChatGPT. OpenAI defines in its Privacy Policy, for example, that in addition to typical information such as cookies and device information, input and file uploads may also be collected and analyzed and presumably used to improve the service and AI model. This can pose a variety of privacy and data security issues. For one thing, the user may upload personal data that he or she is not permitted to process in this way. Secondly, non-personal data such as critical internal company information or intellectual property may also be affected. The worst-case scenario is that this information is used to “improve” the AI model, making it de facto public and difficult or nearly impossible to delete.

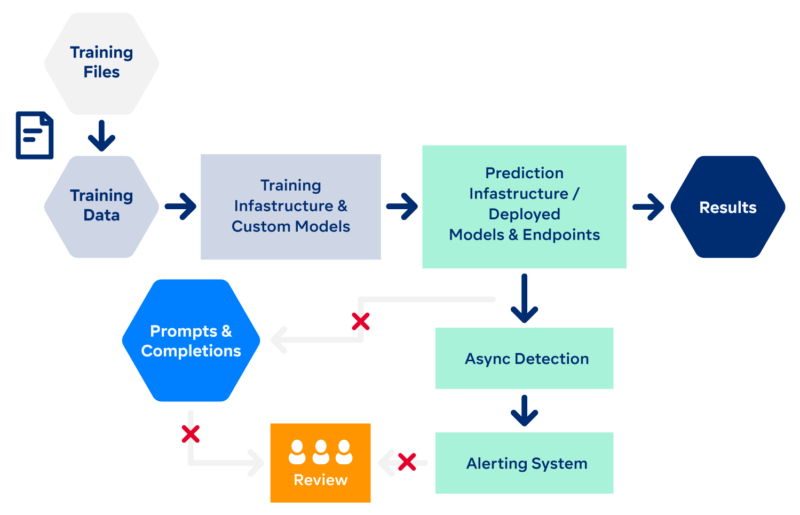

Use of “ready-made” AI models

The situation is different for AI services where ready-made models are only used or where optimized models trained with customer data are also only available to the customer him or herself, i.e., with a clearly defined client model. The Azure OpenAI Services can be mentioned here as an example. Models such as ChatGPT (or soon also GPT-4) can also be used here, but with considerably more control for the customer. Queries and responses to AI models in Azure OpenAI Services are according to Microsoft not used to improve the model. Instead, they are only stored for up to 30 days. In order to prevent misuse scenarios, direct intervention by authorized monitoring personnel and/or improvements to content management systems are possible, for example, which detect and prevent ethically questionable use. For highly regulated companies or use cases with particularly sensitive personal data, there is also an opt-out option here (provided Microsoft agrees) that prevents logging and potential review of input.

Even when fine-tuning and re-training models with your own data, it is guaranteed that they are protected. Training data can be stored encrypted in Azure Files. Models optimized with customer data are then only available to the customer themselves (authenticated and authorized, e.g., using Azure AD). In such a model, the customer has control over critical data. This means that the service differs only in a few places from other Azure and Microsoft 365 services such as Azure Files, Teams or SharePoint Online. The obligations for all these services remain the same: the company is responsible for which data it stores there (e.g., with regard to personal data) and for ensuring that company information does not become public.

The duty of care remains

All things considered; it remains to be said that the duty of care with regard to data when dealing with cloud AI services is at least as high as with all other types of cloud services. In case of doubt, a data protection officer should always be consulted or legal advice sought.

ChatGPT & ESG: What about the ecological footprint?

When discussing the opportunities and risks of generative AI like ChatGPT, the topic of sustainability is very much part of the discussion. However, since the technology is still quite new, reliable studies are few and far between. What does ChatGPT itself “say” about its environmental footprint? Here is the chatbot’s response:

Hello ChatGPT, I would like to know more about ChatGPT and sustainability. First of all: what issues need to be considered?

ChatGPT: There are several topics you could explore:

- Environmental impact: AI technologies like ChatGPT require significant amounts of power to run. You might consider exploring how AI companies are working to reduce their environmental footprint and make their products more sustainable.

- Sustainability potentials: ChatGPT can help companies and individuals make more sustainable decisions by for example, suggesting environmentally friendly products or services or providing information about sustainable practices.

- Ethical implications: As AI technologies become more prevalent, it is important to consider the ethical implications. How do we ensure that AI systems do not reinforce existing biases or make decisions that harm marginalized communities?

Very interesting points, thank you ChatGPT. In terms of environmental impact: How much energy was actually used to train ChatGPT?

ChatGPT: The training of ChatGPT-3, the largest version of the model, is estimated to have consumed about 284 terawatt-hours (TWh). By comparison: This is approximately equivalent to the energy consumption of the entire country of Belgium within one year. [Author’s note: ChatGPT is exaggerating a bit here. A recent study (Patterson et al., Carbon Emissions and Large Neural Network Training, 2021) estimates that GPT-3 consumes 1.3 terawatt-hours of electricity, a figure that ought not to be greater by two orders of magnitude for ChatGPT. GPT-4 is also not yet considered by ChatGPT in this response. Since this version is six times larger than GPT-3, a disproportionate increase in current consumption must be assumed.]

It is also important to remember that energy consumption is not the only factor to consider when considering the environmental impact of AI. Materials used to manufacture hardware, e-waste disposal, and carbon emissions associated with data centers all contribute to the overall environmental footprint of AI technologies.

How many tons of greenhouse gases were emitted to supply this energy?

ChatGPT: The estimated greenhouse gas emissions associated with ChatGPT-3 training are approximately 1.5 million metric tons of CO2e. [Author’s note: Again, ChatGPT is somewhat at fault here. To the best of our knowledge, the emission levels are probably not quite that drastic. According to the above-mentioned study, the training of GPT-3 is assumed to result in about 550 t CO2e, which is still 50 times the per capita annual emissions in Germany.]

These emissions, however, vary with a number of factors, including the energy mix of the power grid where the training takes place and the efficiency of the hardware used.

It is clear that the energy consumption and the associated greenhouse gas emissions generated by AI training are significant and should be taken into account when considering the environmental impact of AI technologies. Efforts are being made to reduce emissions.

Very interesting, what exactly are these efforts?

ChatGPT: An important concept is sparsity, which means that the model only needs a subset of the input data. This can be achieved, for example, by techniques such as pruning, which removes less important connections in the neural network. [Author’s note: This is a very effective approach where it is possible to cap large amounts of connections, making the model much more efficient. If you are interested, please do not hesitate to contact us.]

Another approach is to use more efficient hardware, such as specialized chips optimized for the particular AI application. These chips can be designed to perform calculations much more energy-efficiently than conventional CPUs or GPUs.

In addition, models can also be created by design with fewer parameters, which reduces energy consumption.

Thanks for the chat, ChatGPT.

Preliminary conclusion:

The answers that ChatGPT itself provides, as well as the additional study results, refer primarily to the training, and draw a rather negative eco-balance in this respect. It remains to be seen how things stand with regard to the operation and use of ChatGPT. Is this justifiable under the given sustainability aspects? What is the environmental footprint of alternative ways to get information (e.g., search engines) and develop ideas? Could a combination that makes sense from a sustainability perspective possibly be achieved by placing LLM behind search queries? New studies are needed to address these aspects in order to gain clarity.

GenAI in practice: Example: Microsoft 365 Copilot:

In March 2023, Microsoft announced a new tool: Microsoft 365 Copilot. The solution is a combination of a Large Language Model (LLM) and data from the Microsoft Graph developer environment. It is designed to assist in creating documents and presentations, reading and summarizing emails, analyzing data, and much more.

AI has been integrated into many Microsoft 365 apps for some time now and enables employees to do the following, for example:

- Automatic reply selection in Teams and Outlook

- Design proposals in PowerPoint

- Live subtitles in teams

With Microsoft 365 Copilot, an additional LLM is now being brought to Microsoft 365 apps such as PowerPoint, Excel, Word, Outlook, Microsoft Teams, Viva and SharePoint and is intended to provide further simplification and assistance.

Microsoft Copilot vs. isolated ChatGPT usage

Compared to the previous AI functions, more possibilities are added with Copilot. At the same time, it minimizes the risks that ChatGPT poses for companies.

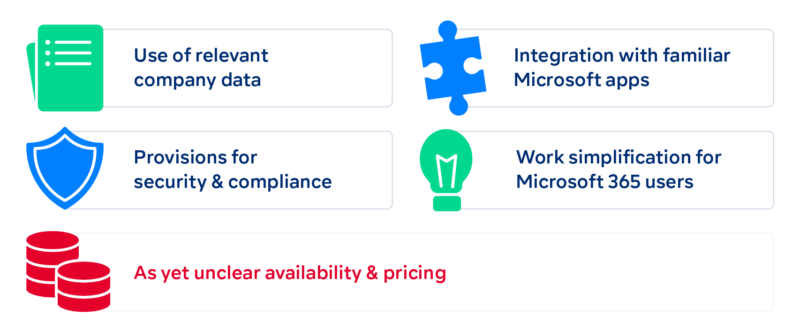

Use of relevant company data

The company’s own data and documents are used as input so that the results are relevant to the company and help the employees more effectively in their specific tasks compared to broad ChatGPT results.

Security and privacy

Copilot promises to meet Microsoft’s usual security standards and maintain security, compliance and privacy. This should minimize the risk of business secrets or personal data becoming available outside the company, for example.

Integration with familiar Microsoft apps

By extending it with LLM, the entire app functionality can be exploited. Employees no longer need to know individual commands or functions, but can use them by integrating LLMs with natural voice commands. This makes it easier, for example, to work with

- Excel: There is no longer a need to know specific Excel formulas. Copilot is supposed to select the necessary formula for the “calculate average” request, for example.

- PowerPoint: Transcripts and notes should be able to be used as the basis for presentations that Copilot creates from them.

- SharePoint: Responsive pages should be much easier to build with just a few prompts.

What about actual usability?

Copilot will gradually make its way into all of Microsoft’s productivity apps. To date, however, the solution is only available to a few selected customers and it has not yet been announced which functionalities will be available to other customers and when. The pricing and licensing costs are also still unclear.

What is becoming clear is that Microsoft 365 Copilot has the potential to make the digital world of work more accessible for employees – regardless of their level of knowledge, experience or ability. Instead of having to fully learn how to use software, only natural language requirements would be necessary. This would seem to be a very promising addition, saving employees time and training efforts and allowing them to focus more on content-related issues instead.

It remains to be seen when this will be the case across the board and how the new functionalities will be accepted in practice. Time will tell whether Copilot will revolutionize the world of office work or whether it will be a successor to the Office Assistant Clippit.

GenAI in practice: Medicine & healthcare example

How ChatGPT and other GenAI solutions can support the work of copywriters, designers, software developers and other knowledge workers is widely discussed. But what about in areas where employees do not exclusively sit at their desks, e.g. in the healthcare sector?

At this year’s DMEA – Connecting Digital Health, Karl Lauterbach – the German Federal Minister of Health – gave his take on the use of big language models in medicine: The Minister says that the digitization strategy presented at the beginning of March is already outdated because developments with Large Language Models (LLMs) are moving so fast.

The fact that the German healthcare system can only react slowly to these developments is understandable. Among the reasons for this are the complexity (from actors to regulation to tech infrastructure) and the low level of research investment in AI in Germany compared to other countries.

Nevertheless: The potential applications of LLMs such as ChatGPT in medicine and healthcare are manifold. The Minister also expressed his conviction at the DMEA that it will change medicine sustainably and fundamentally – and significantly more than existing AI models with specific capabilities, e.g., in the field of diagnostics or in medical imaging.

Examples of how LLMs could change medicine & healthcare:

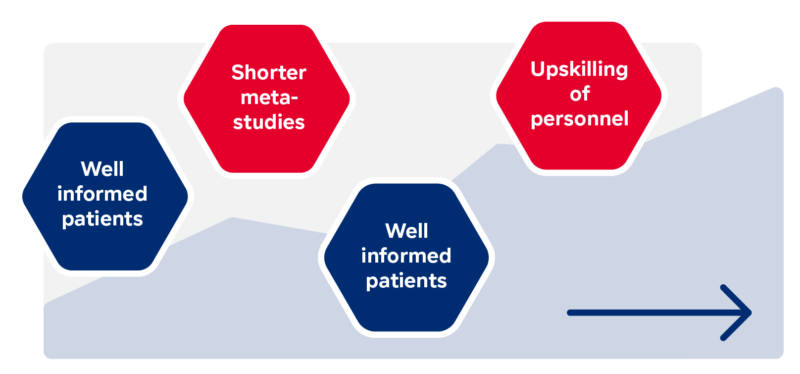

- Meta-studies: “There is no reason to believe that models could not do a meta-study of published medical studies better than humans,” Karl Lauterbach said at DMEA. On the contrary. In fact, given the large knowledge repository, LLMs may soon be much better at performing pooling studies. This new semantic component can take meta-studies to a qualitatively new level and accelerate the development of drugs and treatments.

- Empowered Patient: ChatGPT can provide users with expert knowledge in no time at all, which can be processed in an easily accessible way. This is how AI builds a bridge between experts and the population. This makes medical knowledge more accessible and reduces the so-called “knowledge gap”. The notion of the “empowered Patient” becomes a reality.

- Skills shortage: Prof. Dr. med. Klemens Budde from the Charité in Berlin added in his presentation at the DMEA that ChatGPT leads to better reporting results, predominantly among younger physicians, while chief physicians are less likely to embrace the support it provides. They criticize, among other things, that unused skills could be lost through ChatGPT. However, Budde also sees the opportunity for upskilling: similar to the empowered patient, knowledge is also made available outside groups with specialized expertise , which could represent a solution to the shortage of healthcare professionals.

When will GenAI be ready for use in the health sector?

As of now, the use of GenAI is far from being a matter of course. GenAI literacy – analogous to digital literacy – has so far been lacking among the general public, but also in specialist circles. Alongside skills such as prompt engineering, this also includes the reflective use of results and understanding of how they come about.

The reliance on generative AI and ChatGPT, as well as the ethical aspects in the generation and use of results, also raises the question of privacy. In the health sector in particular, this often involves sensitive data, the protection of which must first be guaranteed when it is processed by GenAI.

Before elaborate AI solutions can be discussed, the healthcare sector in Germany faces the challenge of modernizing telematics & IT infrastructure, replacing existing older systems, and networking facilities in a targeted manner. Without this solid foundation, AI & data-driven solutions are not operationally feasible.

What you can do in your company right now

Generative AI has the potential to fundamentally change our everyday lives and the world of work. We hope that the information above will provide you with some orientation regarding the possibilities and limitations of this technology.

If you are interested in putting LLMs and related AI technologies to work in your organization, we recommend an ideation workshop before you get started. In this session, we work together to determine how and where GenAI can specifically help you in your day-to-day operations. Guiding questions for this include:

- What processes exist that currently require a great deal of manual effort?

- In which process steps does a higher automation rate fail because the data quality is too low?

- In which processes must structure information be extracted from open text (e.g., emails)?

- Where is AI support needed in terms of processing image, audio or video material?

- In which areas would employees benefit from being able to find internal company information more quickly and easily?

- Where can employees be unburdened by being given suggestions for individual tasks that they only have to verify?

- What processes would run more efficiently if people only had to look at the really relevant cases?

You can find more information about possible next steps here:

GenAI solutions for businesses

What experience have you had with ChatGPT? Where do you see opportunities and where do you see challenges for its use in your company? Join us in the discussion: You can get in touch with us here.

We look forward to exchanging ideas with you and, if you wish, we will also support you in the actual implementation!